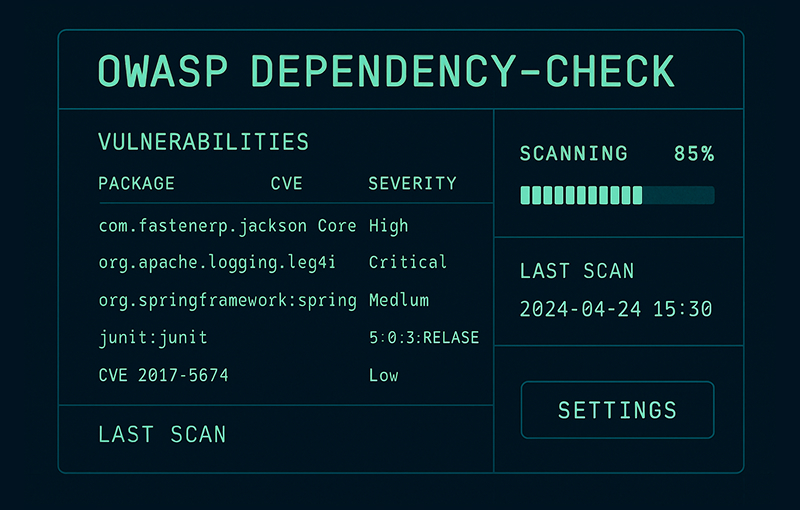

OWASP Dependency-Check is a free, open-source Software Composition Analysis (SCA) tool that acts as your first line of defence against vulnerable third-party components. Think of it as an automated security guard for your project’s dependencies, checking them against public vulnerability databases such as the National Vulnerability Database (NVD) every time it runs.

Modern software isn’t built from scratch. We assemble it using dozens, sometimes hundreds, of open-source libraries to move faster. But every one of those dependencies is a potential backdoor for an attacker. OWASP Dependency-Check systematically inventories these components and flags any with publicly known vulnerabilities, giving you a clear picture of your supply chain risk.

This isn’t just a reactive scan; it’s about shifting security left. By integrating dependency checking directly into your workflow, you move from panicked, post-breach fire-fighting to a proactive, continuous security posture. This is fundamental to building a secure software development life cycle.

For anyone building products for the European market, this kind of tooling is no longer just a best practice—it’s fast becoming a compliance necessity.

Meeting Modern Compliance Demands

EU regulations such as the Cyber Resilience Act (CRA) are set to make identifying a product’s components, and the known vulnerabilities in them, a mandatory part of placing that product on the market. Automated dependency scanning is a practical way to meet that obligation at scale. The CRA demands transparency and proactive vulnerability management, and tools like Dependency-Check are well positioned to help.

Ever since the 2013 OWASP Top 10 list highlighted the risk of “Using Components with Known Vulnerabilities,” this tool has been a cornerstone of the SCA world. Its core function—linking your components to specific Common Vulnerabilities and Exposures (CVEs)—is exactly what you need to generate the evidence and documentation required by the CRA. For teams in the ES region, it’s an essential part of preparing both software and hardware for market entry.

Practical Value That Prevents Fire Drills

The real-world value of Dependency-Check is its practicality. It stops the expensive chaos that erupts when a major vulnerability is found in a library you’re using after your product has shipped. For example, when the Log4Shell vulnerability (CVE-2021-44228) was discovered, teams with automated dependency scanning could instantly identify which of their applications used the vulnerable log4j library and deploy patches within hours, not weeks.
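To make the Log4Shell scenario concrete, here is a minimal Python sketch of that “which applications are affected?” question, answered from archived Dependency-Check JSON reports (produced with `--format JSON`). It assumes a hypothetical layout with one `dependency-check-report.json` per project under a common directory, and it assumes the report’s top-level `dependencies` list carries `fileName` and `vulnerabilities[].name` fields; confirm those names against a report from your own version.

```python
import json
from pathlib import Path


def affected_files(report: dict, cve_id: str) -> list:
    """Return the file names of dependencies in one report that carry cve_id."""
    hits = []
    # Assumed layout: {"dependencies": [{"fileName": ..., "vulnerabilities": [{"name": ...}]}]}
    for dep in report.get("dependencies", []):
        if any(v.get("name") == cve_id for v in dep.get("vulnerabilities", [])):
            hits.append(dep.get("fileName", "<unknown>"))
    return hits


def find_cve(report_root: Path, cve_id: str) -> dict:
    """Map each archived report under report_root to the dependencies hit by cve_id."""
    results = {}
    # Hypothetical layout: one dependency-check-report.json per project directory.
    for path in sorted(report_root.rglob("dependency-check-report.json")):
        report = json.loads(path.read_text(encoding="utf-8"))
        files = affected_files(report, cve_id)
        if files:
            results[str(path)] = files
    return results
```

Calling `find_cve(Path("reports"), "CVE-2021-44228")` would then list every project report that contains the vulnerable log4j jar.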

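Instead of relying on slow, manual audits, you get automated, repeatable checks that fit right into your CI/CD pipeline. The outputs are tangible and immediately useful: machine-readable reports (XML, JSON, SARIF) that inventory your dependencies and their known vulnerabilities. Dependency-Check does not itself emit a standard SBOM format such as CycloneDX or SPDX, but its component inventory complements the Software Bills of Materials (SBOMs) that are foundational for CRA compliance and demonstrating due diligence.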

Key Takeaway: Adopting OWASP Dependency-Check isn’t just about finding bugs. It’s about building a documented, evidence-based security process that satisfies regulators, reassures customers, and helps your team sleep better at night.

Research has repeatedly shown that development teams who integrate tools like this significantly cut their exposure to vulnerabilities. It’s a direct and measurable improvement to your security posture. For a closer look at these findings and the tool’s relevance to regulations, check out the analysis on the OWASP blog.

To get a clearer picture of what this tool brings to the table, here’s a quick summary of its key features and how they map to compliance needs.

OWASP Dependency Check at a Glance

This table breaks down the tool’s core functions, supported technologies, and its direct relevance for development and compliance teams working towards CRA readiness.

| Feature | Description | Relevance for CRA Compliance |
|---|---|---|
| Dependency Scanning | Identifies third-party and open-source components in your project across various languages and build systems (e.g., Maven, Gradle, npm, NuGet). | Directly supports the CRA requirement to identify all components in a product, forming the basis of your SBOM. |
| Vulnerability Detection | Correlates identified components with known vulnerabilities listed in the NVD and other sources, assigning severity scores (CVSS). | Fulfills the obligation to ensure products are placed on the market “without known exploitable vulnerabilities.” Essential for risk assessment. |
| Multiple Report Formats | Generates reports in various formats, including HTML, XML, JSON, and SARIF, for easy integration into different workflows. | Provides the tangible evidence needed for Annex II and Annex VII technical documentation, showing due diligence in vulnerability identification and management. |
| False Positive Suppression | Allows teams to create suppression files to manage and document false positives, reducing noise and focusing on genuine risks. | Crucial for maintaining accurate and actionable vulnerability data, demonstrating a mature process for risk evaluation as expected by regulators. |
| CI/CD Integration | Integrates with popular CI/CD tools such as Jenkins (via a dedicated plugin), GitLab CI and GitHub Actions, enabling automated scans on every build. | Embeds security into the development lifecycle (“secure by design”), a core principle of the CRA. Enables rapid detection and remediation of new vulnerabilities. |

By leveraging these features, teams can build a robust, automated, and compliant vulnerability management process that stands up to the scrutiny of modern cybersecurity regulations.

Running Your First Scan in Under 10 Minutes

Right, enough theory. Let’s get our hands dirty and run your first OWASP Dependency-Check scan. I’ll walk you through the most common ways to get it running, so you can go from zero to a full vulnerability report in just a few minutes.

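A quick heads-up: the very first time you run the tool, it needs to pull down the entire National Vulnerability Database (NVD). This can take a while, so it’s a perfect excuse to grab a coffee. Since version 9.0, Dependency-Check fetches this data through the NVD API, which is heavily rate-limited without an API key; requesting a free key from NIST and passing it with --nvdApiKey makes the download far quicker. The good news is that after this initial setup, subsequent scans are much, much faster as they only need to fetch updates.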

The Command Line for Quick Local Scans

The Command Line Interface (CLI) is the most direct way to kick off a scan. It’s perfect for running quick, ad-hoc checks on your local machine or for scripting in environments where you don’t have a build tool like Maven or Gradle handy.

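Once you’ve got the CLI installed and added to your system’s PATH, just navigate to your project’s directory. The launcher’s name depends on how you installed it: the release zip ships dependency-check.sh (Linux and macOS) and dependency-check.bat (Windows), while Homebrew installs it as plain dependency-check, which is the name used in the next command. For this example, let’s imagine we’re checking a simple Node.js project containing a package.json and a node_modules folder.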

You’d run this exact command from the root of your project:

```bash
dependency-check --scan . --project "MyWebApp" --format HTML
```

Let’s quickly break that down:

- `--scan .` tells the tool to scan everything in the current directory, including the `node_modules` folder where your dependencies live.
- `--project "MyWebApp"` is just a friendly name that will show up in the report.
- `--format HTML` specifies the output, giving you a clean, easy-to-read report.
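If you want to trigger the same scan from a script (a pre-commit helper, say), a minimal Python sketch can assemble the command line from those flags and hand it to `subprocess`. It assumes the launcher is on your PATH as `dependency-check`; pass `launcher="dependency-check.sh"` or `"dependency-check.bat"` if that is how yours is installed. It also sets `--out` explicitly, which only makes the CLI’s default output location (the current directory) visible.

```python
import shutil
import subprocess
import sys
from typing import List


def build_scan_command(project: str, scan_path: str = ".", fmt: str = "HTML",
                       out_dir: str = ".", launcher: str = "dependency-check") -> List[str]:
    """Return the argv list for a Dependency-Check CLI scan."""
    return [launcher, "--scan", scan_path, "--project", project,
            "--format", fmt, "--out", out_dir]


def run_scan(cmd: List[str]) -> int:
    """Run the scan and return the CLI's exit code (127 if the launcher is missing)."""
    if shutil.which(cmd[0]) is None:
        print(f"{cmd[0]} not found on PATH", file=sys.stderr)
        return 127
    return subprocess.run(cmd, check=False).returncode
```

In a script you would call `sys.exit(run_scan(build_scan_command("MyWebApp")))` so that a failed scan also fails the script.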

Once it’s done, you’ll find a dependency-check-report.html file sitting in your directory. This kind of immediate feedback is fantastic for developers who want to check their work before committing code. Honestly, just understanding the components that make up your application is a huge first step in securing your digital products, a topic we explore in our guide on software for your supply chain.

Seamless Integration with Maven and Gradle

If you’re working on a Java project, the best way to do this is by plugging Dependency-Check directly into your build process. This makes security scanning a natural part of every single build, not some special task you have to remember to do later.

For Maven Users

With Maven, all you need to do is add the plugin to your pom.xml. From there, triggering a scan is just a single command.

Add this snippet to the `<plugins>` section of your pom.xml:

```xml
<plugin>
    <groupId>org.owasp</groupId>
    <artifactId>dependency-check-maven</artifactId>
    <version>9.2.0</version> <!-- Use the latest version -->
    <executions>
        <execution>
            <goals>
                <goal>check</goal>
            </goals>
        </execution>
    </executions>
</plugin>
```

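With that configuration in place, you can now run a scan from your terminal:

```bash
mvn org.owasp:dependency-check-maven:check
```

Because the check goal is bound in the `<executions>` block, it also runs automatically whenever you run `mvn verify`, its default lifecycle phase.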

The report will pop up in your project’s target directory. Simple as that.

For Gradle Users

The process is just as straightforward for Gradle. Just add the plugin to your build.gradle file:

```groovy
plugins {
    id 'org.owasp.dependencycheck' version '9.2.0' // Use the latest version
}
```

And then run the scan from your terminal:

```bash
./gradlew dependencyCheckAnalyze
```

This command runs the analysis and drops the report right into the build/reports folder.

By embedding the scan into your build tool, you make security an unavoidable checkpoint. It’s a powerful cultural shift that encourages developers to think about vulnerabilities as they write code, not weeks later during a security audit.

Ultimate Portability with Docker

But what if you aren’t in a Java environment, or you just want a completely isolated, portable way to run scans? This is where the official Docker image shines. It packages the CLI and everything it needs, including a Java runtime, into a neat container, so the tool behaves the same way on any machine.

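For instance, to scan a Python project that uses a requirements.txt file, you could run:

```bash
docker run --rm \
  -v "$(pwd)":/src \
  -v "$(pwd)/reports":/report \
  owasp/dependency-check \
  --scan /src \
  --format ALL \
  --project "MyDockerProject" \
  --out /report \
  --enableExperimental
```

This command mounts your current project directory ($(pwd)) at the container’s /src directory and mounts a reports directory at /report for the output; Docker creates the host reports directory if it doesn’t exist yet. The --enableExperimental flag is there because Dependency-Check classes its Python analysers, including the one that reads requirements.txt, as experimental and skips them by default. One caveat: unless you also persist the vulnerability data (the project’s own Docker instructions mount a host directory at /usr/share/dependency-check/data), every container run starts with an empty database and downloads the NVD data again. It’s an incredibly clean way to run the tool.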

I’m a huge fan of this approach because it avoids any potential conflicts with Java versions or other tools on your local machine. It has become my go-to for CI/CD pipelines where having a clean, repeatable environment is absolutely critical.

Fine-Tuning Scans and Taming False Positives

Getting your first Dependency-Check report is a great start, but the real power comes from moving beyond the defaults. A raw, untuned scan can be noisy, inefficient, and slow—especially when you’re trying to integrate it across a development team. Let’s look at how to refine the process to make your scans faster, smarter, and far more actionable.

A classic pain point is the time it takes for every developer or CI/CD agent to download the entire NVD dataset. When multiple pipelines kick off at once, each one pulling the same large dataset, you’re just wasting time and network bandwidth. It’s a bottleneck that can completely undermine your automation efforts.

Centralise NVD Data to Speed Up Scans

The most effective fix is to stop treating the NVD data as a disposable, per-scan download. Instead, you can set up a centralised, shared database that all your scans point to. This is a genuine game-changer for CI performance.

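You can use a central database like PostgreSQL, MySQL, or even a shared H2 instance. The database has to exist before the first scan, and the project documents initialisation SQL scripts for the supported engines that you may need to run first. For example, to get your scans using a shared PostgreSQL database, you just need to update your command with the right connection details.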

Here’s what that looks like in practice for the CLI:

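```bash
# --dbDriverPath is only needed if the PostgreSQL JDBC driver jar is not on
# the CLI's classpath; /path/to/postgresql.jar is a placeholder.
dependency-check.sh \
  --scan . \
  --project "MyProject" \
  --format HTML \
  --dbDriverName org.postgresql.Driver \
  --dbDriverPath /path/to/postgresql.jar \
  --connectionString "jdbc:postgresql://your-db-host:5432/dependencycheck" \
  --dbUser "dc_user" \
  --dbPassword "your_secure_password"
```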

This tells Dependency-Check to connect to your central database instead of creating a local one. The very first scan will take a while to populate it, but every scan after that will be dramatically faster. They’ll only need to fetch updates, not the entire historical dataset.
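To keep the password from the example above out of scripts and version control, a pipeline wrapper could read the connection details from environment variables instead. This Python sketch assumes hypothetical variable names (`DC_DB_URL`, `DC_DB_USER`, `DC_DB_PASSWORD`) and only emits the CLI’s `--dbDriverName`, `--connectionString`, `--dbUser` and `--dbPassword` flags. The password still ends up in the process’s argument list, so the CI agent itself must be trusted.

```python
import os
from typing import List, Mapping, Optional

# Hypothetical environment variable names; adapt them to your CI's secret store.
REQUIRED = ("DC_DB_URL", "DC_DB_USER", "DC_DB_PASSWORD")


def database_args(env: Optional[Mapping[str, str]] = None,
                  driver: str = "org.postgresql.Driver") -> List[str]:
    """Build Dependency-Check's central-database flags from the environment."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("missing environment variables: " + ", ".join(missing))
    return [
        "--dbDriverName", driver,
        "--connectionString", env["DC_DB_URL"],
        "--dbUser", env["DC_DB_USER"],
        "--dbPassword", env["DC_DB_PASSWORD"],
    ]
```

A CI step would then run `["dependency-check.sh", "--scan", ".", *database_args()]` with the secrets injected as environment variables.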

Keeping Your Vulnerability Data Fresh

Once you have a central database, you have to make sure it doesn’t get stale. The best way to handle this is with a dedicated, scheduled job that runs once a day to update this central NVD cache.

This single update job uses the --updateonly flag. For example, you could set up a daily cron job that executes this command. It does nothing but refresh the vulnerability data, ensuring all other scans relying on this database get the latest intelligence without performing the slow update themselves.

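```bash
# This command ONLY updates the database, it doesn't run a scan.
# (--dbDriverPath is only needed if the JDBC driver jar is not on the classpath.)
dependency-check.sh --updateonly \
  --dbDriverName org.postgresql.Driver \
  --dbDriverPath /path/to/postgresql.jar \
  --connectionString "jdbc:postgresql://your-db-host:5432/dependencycheck" \
  --dbUser "dc_user" --dbPassword "your_secure_password"
```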

With that in place, all your other CI jobs can run with the --noupdate flag. This forces them to use the existing central data, making them incredibly fast.
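The split described above, one scheduled job that refreshes the data and many CI jobs that only read it, comes down to two different argument lists. A minimal Python sketch, with hypothetical role names `"updater"` and `"ci"`; the database flags are passed in unchanged so both roles point at the same central database.

```python
from typing import List, Sequence


def job_args(role: str, db_args: Sequence[str], project: str = "MyProject",
             scan_path: str = ".") -> List[str]:
    """Return CLI arguments for the nightly updater or for a CI scan job."""
    if role == "updater":
        # Scheduled job: refresh the shared vulnerability data; no scan is run.
        return ["--updateonly", *db_args]
    if role == "ci":
        # Pipeline job: scan against the shared data without updating it.
        return ["--scan", scan_path, "--project", project, "--noupdate", *db_args]
    raise ValueError(f"unknown role: {role!r}")
```

Keeping both roles in one helper makes it hard for a CI job to accidentally trigger the slow update path.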

In Europe’s DevSecOps landscape, OWASP Dependency-Check is a standout free SCA tool, especially for the diverse tech stacks of EU IoT vendors facing CRA deadlines. An empirical study on continuous security highlighted its pipeline integration, noting its use of OWASP and NIST feeds. For teams in the ES region, where supply-chain risks are a major concern, the tool’s automatic updates are vital for mitigating threats in the open-source ecosystems powering thousands of EU projects. You can explore more about these continuous security practices in the full research paper.

The Art of Suppressing False Positives

Now for the most critical part of fine-tuning: managing false positives. A report filled with irrelevant warnings is a report that gets ignored. Dependency-Check sometimes makes an incorrect guess about a library’s identity—its CPE, or Common Platform Enumeration—and flags a vulnerability that doesn’t actually apply.

This is where a suppression file comes in. It’s a simple XML file where you explicitly tell the tool, “I’ve reviewed this finding, it’s not a real risk here. Ignore it.” This simple act cleans up your reports, letting your team focus only on genuine threats.

A clean, curated report builds trust. When your team knows that every flagged CVE is a real, actionable issue, they are far more likely to take security seriously and prioritise fixes.

Let’s walk through a real-world scenario. Imagine your scan flags commons-text-1.9.jar with CVE-2022-42889, a critical RCE vulnerability. Your team investigates and determines that the vulnerable function is never actually called in your application.

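To suppress this, you create an XML file, let’s call it vulnerability-suppressions.xml, and add the following. The `<sha1>` value must be the SHA-1 of the jar you actually ship; the report lists it for each dependency, and the HTML report’s suppress button next to each finding produces a ready-made entry you can paste in and annotate.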

```xml
<?xml version="1.0" encoding="UTF-8"?>
<suppressions xmlns="https://jeremylong.github.io/DependencyCheck/dependency-suppression.1.3.xsd">
    <suppress>
        <notes><![CDATA[
        File: commons-text-1.9.jar
        We have confirmed that the vulnerable Text4Shell functionality is not used in our application.
        This finding has been reviewed and accepted by the security team on 2024-10-26.
        ]]></notes>
        <sha1>aae2b8e90691be7c277b10a278f84219b1c953c4</sha1>
        <cve>CVE-2022-42889</cve>
    </suppress>
</suppressions>
```

Then, you just point your scan to this file using the --suppression argument:

```bash
dependency-check.sh --scan . --suppression "vulnerability-suppressions.xml"
```

The <notes> section here is absolutely crucial. It documents why you suppressed the finding, creating an audit trail for future team members or compliance reviewers. This transforms your suppression file from a simple ignore list into a valuable piece of security documentation.
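Because the notes are the audit trail, some teams generate suppression entries from their triage records rather than hand-editing XML. A minimal Python sketch using only the standard library: it emits the same `notes`, `sha1` and `cve` elements as the example above, in the 1.3 suppression namespace. The entry keys (`file`, `sha1`, `cve`, `reason`, `reviewed_on`) are a hypothetical record format, and the notes are written as escaped text rather than CDATA, which XML parsers treat the same way.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

NS = "https://jeremylong.github.io/DependencyCheck/dependency-suppression.1.3.xsd"


def suppression_xml(entries: List[Dict[str, str]]) -> str:
    """Build a suppression file; each entry needs file, sha1, cve, reason, reviewed_on."""
    root = ET.Element("suppressions", xmlns=NS)  # default namespace on the root
    for entry in entries:
        suppress = ET.SubElement(root, "suppress")
        # The notes carry the audit trail: what, why, and when it was reviewed.
        ET.SubElement(suppress, "notes").text = (
            f"File: {entry['file']}\n{entry['reason']}\n"
            f"Reviewed and accepted on {entry['reviewed_on']}."
        )
        ET.SubElement(suppress, "sha1").text = entry["sha1"]
        ET.SubElement(suppress, "cve").text = entry["cve"]
    ET.indent(root, space="    ")  # requires Python 3.9+
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"
```

Writing the result to vulnerability-suppressions.xml keeps the reasoning for every suppression in the same reviewed file.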

Reading Reports and Prioritising Fixes

Running an OWASP Dependency-Check scan is a great first step, but it’s just that—a first step. The real win isn’t just finding vulnerabilities; it’s about fixing the ones that pose a genuine threat to your product. This means you need to get good at reading the reports, prioritising fixes intelligently, and turning a long list of data into a clear action plan.

Without a solid strategy, a massive list of CVEs can feel completely overwhelming. This is how “vulnerability fatigue” sets in, and soon enough, teams start tuning out the noise. Let’s break down how to read the reports and, more importantly, how to decide which findings need your attention right now.

Decoding the HTML Report

For most teams just getting started, the HTML report is the best place to begin. It’s visual, it’s interactive, and it’s built for human eyes. The first time you open it, you’ll immediately see the high-severity vulnerabilities, usually flagged in bright red.

Here’s what you should focus on immediately:

- Dependency Name: The exact library or component with the vulnerability, for example `log4j-core-2.14.1.jar`.
- Highest Severity: The severity of the most critical vulnerability tied to that dependency, derived from its CVSS score.
- CVE Count: The total number of unique CVEs found in that one component.
- Evidence: This is a crucial section. It shows how Dependency-Check identified the component, which helps you judge whether the match is genuine or a likely false positive.

When you click on a specific dependency, the view expands to show you every single CVE. Pay close attention to the CVSS (Common Vulnerability Scoring System) score. It’s a standardised number from 0 to 10 that rates the severity of a flaw. As a rule of thumb, anything 9.0 or higher is considered critical and should trigger an immediate investigation.
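The bands behind that rule of thumb come from the qualitative severity rating scale in the CVSS v3.x specification, which this short Python sketch encodes. Older CVEs that only carry a CVSS v2 score use a different scale with no Critical band (High covers 7.0 to 10.0), so v2 scores should not be fed into this function.

```python
def cvss_v3_rating(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores run from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1-3.9
    if score < 7.0:
        return "Medium"    # 4.0-6.9
    if score < 9.0:
        return "High"      # 7.0-8.9
    return "Critical"      # 9.0-10.0


def needs_immediate_investigation(score: float) -> bool:
    """The rule of thumb from the text: Critical (9.0 and above) gets looked at now."""
    return cvss_v3_rating(score) == "Critical"
```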

Choosing the Right Report Format for Your Needs

The HTML report is perfect for manual reviews, but different situations demand different formats. Knowing which one to use is key to integrating Dependency-Check into your broader workflows, whether that’s an automated pipeline or your compliance documentation.

Here’s a quick rundown of the most common formats and where they fit best.


| Report Format | Primary Use Case | Key Feature | Best for… |
|---|---|---|---|
| HTML | Manual review and analysis by development teams. | Interactive, visual, and easy to navigate. | Initial triage, team discussions, and sharing findings with stakeholders. |
| XML/JSON | Machine-to-machine integration and custom scripting. | Structured, machine-readable data. | Feeding scan results into other tools, custom scripts, or dashboards. |
| SARIF | Integration with security platforms and IDEs. | Standardised format for static analysis results. | Uploading findings to GitHub Security, Azure DevOps, or other SAST platforms. |
| CSV | Data analysis and spreadsheet-based tracking. | Simple, tabular format. | Importing data into spreadsheets for tracking, metrics, and reporting. |

In a real-world scenario, you might have a CI/CD pipeline that generates an XML report to be automatically ingested by your vulnerability management platform. At the same time, it could archive the HTML report so developers can quickly review the details if the build fails. This multi-format approach makes sure the data is useful for both machines and people.
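On the machine-readable side, a short Python sketch shows how a custom dashboard might reduce a JSON report to one row per vulnerable dependency, mirroring the HTML report’s summary. It assumes the JSON report’s `dependencies[].vulnerabilities[]` layout with `cvssv3.baseScore` and `cvssv2.score` fields; those field names are worth confirming against output from your own Dependency-Check version.

```python
import json
from typing import Dict, List, Tuple


def best_score(vuln: Dict) -> float:
    """Prefer the CVSS v3 base score, fall back to CVSS v2, else 0.0."""
    v3 = vuln.get("cvssv3") or {}
    if "baseScore" in v3:
        return float(v3["baseScore"])
    v2 = vuln.get("cvssv2") or {}
    return float(v2.get("score", 0.0))


def summarise(report: Dict) -> List[Tuple[str, int, float]]:
    """One row per vulnerable dependency: (file name, finding count, highest score)."""
    rows = []
    for dep in report.get("dependencies", []):
        vulns = dep.get("vulnerabilities", [])
        if vulns:
            rows.append((dep.get("fileName", "<unknown>"), len(vulns),
                         max(best_score(v) for v in vulns)))
    # Worst dependency first, the order you would triage in.
    return sorted(rows, key=lambda row: row[2], reverse=True)


def load_report(path: str) -> Dict:
    """Read a report written with --format JSON."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

For example, `summarise(load_report("dependency-check-report.json"))` gives rows ready to push to a dashboard.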

A Practical Framework for Prioritisation

Okay, your report is back with ten new vulnerabilities. Where do you even start? Chasing the highest CVSS score isn’t always the smartest move. An effective prioritisation strategy looks at risk in the context of your specific application.

Here’s a simple, three-step framework that works:

1. Start with CVSS Score: First, filter for anything rated Critical (9.0-10.0) or High (7.0-8.9). These are your non-negotiable starting points. A practical example would be immediately flagging a library with a 9.8 CVSS score for a remote code execution (RCE) vulnerability.
2. Assess Exploitability: Is there known public exploit code available for this vulnerability? A flaw with a ready-made exploit on GitHub is far more dangerous than a purely theoretical one. Check the references in the vulnerability details for links to public exploits, such as Exploit-DB entries or Metasploit modules.
3. Consider Business Context: This is the most important part. You have to ask your team: Is the vulnerable part of this library actually used by our application? If a flaw exists in a function your code never calls, the immediate risk drops significantly. This type of contextual analysis is a critical part of the overall process for CRA vulnerability handling, as it demonstrates a mature, risk-based approach.

A CVSS 7.5 vulnerability in a core authentication library with a known public exploit is a much higher priority than a CVSS 9.8 vulnerability in an unused admin reporting feature. Context is everything.
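The framework can be expressed as a sort order. In this minimal Python sketch, `exploit_public` and `code_path_used` are hypothetical triage fields your team fills in (Dependency-Check supplies the CVE and its score, not reachability), and the CVE identifiers are placeholders. Context outranks raw score, which reproduces the example above: the CVSS 7.5 authentication flaw lands ahead of the CVSS 9.8 issue in unused code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    cve: str
    component: str
    cvss: float
    exploit_public: bool   # step 2: is there known public exploit code?
    code_path_used: bool   # step 3: does our application use the vulnerable code?


def prioritise(findings: List[Finding], floor: float = 7.0) -> List[Finding]:
    """Rank findings: High/Critical only, then context, then raw CVSS score."""
    # Step 1: start with High and Critical; lower scores are deferred, not ignored.
    shortlist = [f for f in findings if f.cvss >= floor]
    # Steps 2 and 3: reachable, exploitable findings first; CVSS breaks ties.
    return sorted(shortlist,
                  key=lambda f: (f.code_path_used, f.exploit_public, f.cvss),
                  reverse=True)


auth = Finding("CVE-XXXX-0001", "auth-lib", 7.5, exploit_public=True, code_path_used=True)
admin = Finding("CVE-XXXX-0002", "admin-reports", 9.8, exploit_public=False, code_path_used=False)
print([f.component for f in prioritise([admin, auth])])  # ['auth-lib', 'admin-reports']
```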

This approach shifts your team’s mindset from being simple vulnerability finders to becoming true risk managers.

Research backs this up. An empirical study of open-source projects found that teams who adopted OWASP Dependency-Check saw a significant drop in high-severity vulnerabilities. This success is directly tied to the tool’s CPE matching against the NVD feeds, with some early adopters slashing their vulnerability incidence rates by up to 40%. You can explore the impact of SCA tools in this cohort study for a deeper look. By systematically analysing your reports and acting on them with context, you give your own team the best chance of seeing similar gains.

Shifting Left: Automating Scans in Your CI/CD Pipeline

Running an OWASP Dependency-Check scan manually is a great start, but let’s be honest—it relies on someone remembering to do it. The real goal is to make security scanning an invisible, unbreakable part of your development process. This is exactly what integrating it into your Continuous Integration and Continuous Deployment (CI/CD) pipeline achieves. It transforms vulnerability scanning from a periodic chore into a constant, automated reflex.

When you wire up scans directly into your pipeline, you’re essentially putting security on autopilot. Every single commit, every pull request, gets automatically scrutinised for known vulnerabilities long before it has a chance to hit your main branch. This isn’t just about moving faster; it’s a fundamental shift towards a genuine DevSecOps culture.

This simple workflow shows the core idea: scan, analyse, and prioritise, all automatically.

This automated loop ensures every change is consistently checked, making security a seamless part of the development cycle instead of a manual task tacked on at the end.

A Production-Ready GitHub Actions Workflow

For teams on GitHub, setting up an automated scan with GitHub Actions is surprisingly easy. This workflow does more than just run a scan; it acts as a security gate. It will automatically fail the build if it finds a vulnerability at or above a chosen CVSS score, and if you make this job a required status check in your branch protection rules, that failure blocks vulnerable code from being merged.

Here’s a practical, production-ready example you can drop into your own repository. Just create a file at .github/workflows/dependency-check.yml:

```yaml
name: OWASP Dependency-Check Scan

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  dependency-check:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up JDK 11
        uses: actions/setup-java@v4
        with:
          java-version: '11'
          distribution: 'temurin'

      - name: Run Dependency-Check
        uses: dependency-check/Dependency-Check_Action@main
        id: DepCheck
        with:
          project: '${{ github.repository }}'
          path: '.'
          format: 'HTML'
          out: 'reports'
          # Extra CLI arguments: fail the step if any finding scores CVSS 8.0 or higher
          args: >
            --failOnCVSS 8

      - name: Upload Report
        uses: actions/upload-artifact@v4
        if: success() || failure() # Upload the report even when the scan step fails
        with:
          name: dependency-check-report
          path: ${{ github.workspace }}/reports
```

This setup accomplishes three critical things:

- Triggers on Push and Pull Requests: It scans pushes to, and pull requests targeting, your `main` branch, catching problems early.
- Fails the Build on High-Severity Findings: The `--failOnCVSS 8` argument is your gatekeeper. Any dependency with a vulnerability scored 8.0 or higher fails the scan step and stops the build cold.
- Archives the Report: The HTML report is saved as a build artefact, so developers can easily click through and see exactly what needs fixing.
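If you need a gate the failOnCVSS threshold cannot express on its own (for example, printing the offending findings in the job log before failing), a small script over a JSON report can do the same job. A minimal Python sketch, assuming the scan also writes a JSON report and that it exposes `dependencies[].vulnerabilities[]` with `name`, `cvssv3.baseScore` and `cvssv2.score` fields; the script name gate.py is hypothetical.

```python
import json
import sys
from typing import Dict, List


def blocking_findings(report: Dict, threshold: float) -> List[str]:
    """Return 'file: id (score)' for every finding scored at or above threshold."""
    blocking = []
    for dep in report.get("dependencies", []):
        for vuln in dep.get("vulnerabilities", []):
            v3 = vuln.get("cvssv3") or {}
            v2 = vuln.get("cvssv2") or {}
            # Prefer the CVSS v3 base score, fall back to v2, else treat as 0.0.
            score = float(v3.get("baseScore", v2.get("score", 0.0)))
            if score >= threshold:
                blocking.append(f"{dep.get('fileName')}: {vuln.get('name')} ({score})")
    return blocking


def main(argv: List[str]) -> int:
    """Usage: gate.py REPORT.json [THRESHOLD]; exit status 1 blocks the build."""
    threshold = float(argv[2]) if len(argv) > 2 else 8.0
    with open(argv[1], encoding="utf-8") as fh:
        blocking = blocking_findings(json.load(fh), threshold)
    for line in blocking:
        print(line)
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

A pipeline step such as `python gate.py reports/dependency-check-report.json 8` then fails the job exactly when something at or above the threshold is found.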

Jenkins Integration for Maven Projects

If your team uses Jenkins, integrating the scan is just as straightforward. You can add a stage to your Jenkinsfile to run the Maven plugin and handle the results, making dependency scanning a standard part of every build.

Just add this stage to your declarative Jenkins pipeline:

```groovy
pipeline {
    agent any

    stages {
        stage('Build') {
            steps {
                // Your normal build steps here...
                sh 'mvn clean install'
            }
        }

        stage('OWASP Dependency-Check') {
            steps {
                // Run the dependency-check Maven plugin
                sh 'mvn org.owasp:dependency-check-maven:check'
            }
            post {
                always {
                    // Archive the HTML report for every build; don't fail the
                    // archiving step itself if no report was produced
                    archiveArtifacts artifacts: 'target/dependency-check-report.html',
                                     fingerprint: true,
                                     allowEmptyArchive: true
                }
            }
        }
    }
}
```

This Jenkinsfile executes the OWASP Dependency Check immediately after the build stage. The post block is key—it ensures the HTML report is always archived, successful build or not, giving you a consistent audit trail.

Key Takeaway: The goal of CI/CD integration is to make the secure path the easiest path. When scans are automated and builds fail on critical findings, developers are empowered to fix issues immediately, long before they become a security incident.

Best Practices for Pipeline Integration

Beyond the initial setup, a few best practices will make your automated scanning far more effective. Running scans on pull requests, as shown in the GitHub Actions example, is non-negotiable. It gives feedback directly to the developer before their code gets merged, which makes fixing things faster and cheaper.

This automated scanning process also produces crucial evidence for compliance. The reports serve as tangible proof of due diligence, which is essential for standards like the EU’s Cyber Resilience Act. The structured data can also be used to generate a Software Bill of Materials (SBOM), another key compliance artefact. For a detailed breakdown of what’s needed, you can read our guide on CRA SBOM requirements. By automating these checks, you’re not just improving security—you’re building a scalable compliance engine.

Answering Your Top Questions About Dependency Check

Once you start integrating OWASP Dependency Check into your day-to-day workflow, you’ll inevitably run into a few common questions. Moving from theory to practice always uncovers the same hurdles and points of confusion. Here are the top questions we hear from developers, with direct, actionable answers to help you master the tool.

How Can I Speed Up the Initial Scan?

That first scan can feel painfully slow. It has to download the entire National Vulnerability Database (NVD), and while that’s a one-time pain for a single user, it’s a massive bottleneck in any CI/CD environment. Waiting it out isn’t your only option.

A professional setup almost always involves creating a local NVD mirror or a central database. This way, only one machine is responsible for fetching updates from the source, and all your CI runners or developers point to this single, internal location. For CI jobs that run frequently, you can also strategically use the --noupdate flag, forcing the scan to use its existing data and dramatically cutting scan time.

Don’t overlook the basics, either. Dependency-Check is a Java application. If the process is starved for memory, it will absolutely crawl. Make sure you’ve allocated enough heap space to the JVM, especially when you’re scanning larger projects. A simple way to do this before running the CLI is to set an environment variable: export JAVA_OPTS="-Xmx4g".
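When the CLI is launched from a wrapper rather than an interactive shell, the same setting has to reach the child process’s environment. A minimal Python sketch; like the export above, it assumes the launcher script reads JAVA_OPTS, and the launcher name `dependency-check.sh` is an assumption about your install.

```python
import os
import subprocess
from typing import Dict, List, Mapping, Optional


def scan_environment(heap: str = "4g",
                     base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Copy the environment and set JAVA_OPTS to a single -Xmx heap limit."""
    env = dict(os.environ if base is None else base)
    # Keep other JVM options, but drop any earlier -Xmx so ours is unambiguous.
    kept = [opt for opt in env.get("JAVA_OPTS", "").split() if not opt.startswith("-Xmx")]
    env["JAVA_OPTS"] = " ".join(kept + [f"-Xmx{heap}"])
    return env


def run_scan(args: List[str], heap: str = "4g") -> int:
    """Run the CLI (assumed to be on PATH as dependency-check.sh) with the larger heap."""
    cmd = ["dependency-check.sh", *args]
    return subprocess.run(cmd, env=scan_environment(heap), check=False).returncode
```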

What’s the Difference Between Dependency Check and Dependency Track?

This is a really common and important point of confusion. The two tools are designed to work together, but they serve completely different purposes.

OWASP Dependency-Check is the scanner. Its job is to perform a point-in-time analysis of a project. It identifies your dependencies and spits out a report of known vulnerabilities at that specific moment.

OWASP Dependency-Track is the dashboard: a platform for continuous component analysis and vulnerability management. Its main input is a Software Bill of Materials (primarily in CycloneDX format), and it keeps re-checking the components listed there against its vulnerability sources long after the build has finished.

Think of it this way: Dependency-Check takes a single snapshot of your security posture. Dependency-Track maintains an up-to-date inventory across all your projects over time, giving you a continuous, historical view of your organisation’s risk. A common pattern is to run the scanner in your CI pipeline as a build gate and upload an SBOM of the same build to the dashboard for long-term monitoring.

How Do I Fix a False Positive from Bad CPE Matching?

Sometimes, a simple XML suppression rule isn’t the right tool for the job. This is especially true if the scanner has fundamentally misidentified a component because of a bad Common Platform Enumeration (CPE) match. For instance, the scanner might mistakenly flag an internal utility library as a common open-source package with a similar name, leading to a flood of totally irrelevant CVEs.

Instead of suppressing dozens of CVEs one by one, you can give the scanner a ‘hint’ to correct the identification. The hint analyser lets you supply evidence in an XML file to guide the scanner to the correct CPE.

Let’s say the tool incorrectly identifies my-internal-utils.jar as a vulnerable public library. You can create a hints.xml file like this:

```xml
<hints xmlns="https://jeremylong.github.io/DependencyCheck/dependency-hint.1.0.xsd">
    <hint>
        <given>
            <!-- Match the component by evidence collected from its pom.xml -->
            <evidence type="vendor" source="pom" name="groupid" value="my-company" confidence="HIGH"/>
            <evidence type="product" source="pom" name="artifactid" value="internal-utils" confidence="HIGH"/>
        </given>
        <add>
            <!-- Add highest-confidence evidence naming the component's real, internal identity -->
            <evidence type="product" source="hint" name="product" value="my-internal-utils" confidence="HIGHEST"/>
            <evidence type="vendor" source="hint" name="vendor" value="my-company-internal" confidence="HIGHEST"/>
        </add>
    </hint>
</hints>
```

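By passing this file with --hints hints.xml, you add your own highest-confidence evidence for that specific component, which steers CPE matching towards its real identity instead of the public library. It’s a more targeted fix than suppressing CVEs one by one, and it keeps your main suppression file clean. If the bad match still appears, a single suppression rule that targets the offending CPE (the suppression format accepts a `<cpe>` element alongside `<cve>`) removes all of its CVEs at once.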

Navigating the Cyber Resilience Act requires more than just scanning; it demands a clear strategy and robust documentation. Regulus provides a complete platform to assess CRA applicability, map requirements, and generate the necessary technical files and evidence. Stop wrestling with spreadsheets and gain confidence in your compliance roadmap. Discover how Regulus can prepare you for the 2025–2027 deadlines.